HTTPS inspection is a feature offered by many Internet security vendors and marketed at schools and businesses. It promises to strip the encryption from web traffic so that everything is visible to the filtering system, where it can be checked for malware or flagged for inappropriate content. This worked fairly well a decade ago, but in the post-Snowden era of pervasive encryption and advancing Internet security standards it can no longer deliver what it once promised, and instead puts us and our networks at risk.

Encryption: a double-edged sword for schools

My last post laid the foundations of why we spend so much time thinking about security in education. In short it’s because schools are not businesses so it’s not a case of ‘one rule fits all’; finding a balance between safety and functionality when your user base has such vastly differing requirements is tricky.

An essential aspect of our response is ringfencing Internet access to provide basic duty-of-care protections (ensuring age-appropriate content) and to prevent harmful actions (cyberbullying, illegal activity, etc.) perpetrated by or upon a student. Some protections are universal while some must adapt based on the age of the student and their educational needs. All must be airtight (infeasible for a student to bypass) and visible (logged by a system for analysis / alerting).

End-to-end encryption such as that based on TLS (what we still refer to as SSL) defeats both of these requirements. It allows a bypass of network-based filtering and inspection since the ciphertext exchanged over the wire is indistinguishable from random data: it’s just noise.

The way most schools work around this is by deploying a network ‘middlebox’ such as an NGFW or proxy that inserts itself into the communications channel. The client sets up an encrypted connection to the middlebox, and the middlebox in turn sets up a second encrypted connection to the client’s destination, so it retains visibility of everything that transits back and forth.
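One way to see this from the client side is to look at who issued the certificate you were handed. The sketch below is illustrative only: the helper names (`fetch_cert`, `issuer_field`, `looks_intercepted`) and the idea of keeping a set of known middlebox CA names are assumptions of mine, not something any vendor documents. It also assumes the middlebox’s CA is already trusted by Python’s trust store on the device, otherwise the handshake simply fails.

```python
import socket
import ssl
from typing import Iterable, Optional


def fetch_cert(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect to host and return the certificate the client actually received."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def issuer_field(cert: dict, field: str = "organizationName") -> Optional[str]:
    """Pull one field out of the issuer name in a getpeercert()-style dict."""
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == field:
                return value
    return None


def looks_intercepted(cert: dict, middlebox_orgs: Iterable[str]) -> bool:
    """True if the issuer is one of the (hypothetical) middlebox CA names."""
    org = issuer_field(cert)
    return org is not None and org in set(middlebox_orgs)
```

Calling `looks_intercepted(fetch_cert("www.example.com"), {"Example School Filter CA"})` from a managed device would then tell you whether that connection was re-signed by the filter; the organisation name here is a placeholder for whatever your own middlebox CA is called.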

So after years of plain sailing, why has this ship run aground?

Breaking the unbreakable breaks things

The Internet has evolved a plethora of security and privacy standards in recent years prompted by a number of factors such as widespread state-sponsored snooping and increasing cybercrime. Some are specifically designed to defeat the kind of inspection needed in a school context, such as:

- TLS 1.3 - Around for years but only recently adopted broadly, it includes a number of changes such as encrypting the server certificate, rendering passive inspection inoperable.

- Certificate pinning (in applications) - Developers pre-install the certificates their app will trust so it isn’t vulnerable to a man-in-the-middle attack but this breaks all inspection.

- Certificate pinning (on web via HPKP) - Clients cache public key details from sites, so if a client accesses a site from a non-inspected connection it will then later refuse to connect when inspected.

Others are designed to raise the bar on validating that a site is genuine, such as:

- Certificate transparency - A public log of all issued certificates designed to make it easier to find illegitimate certs.

- OCSP stapling - Improves revocation check timeliness and accuracy.

- HTTP strict transport security (HSTS) - Forces clients to use TLS/SSL.

- 398 day issuance - Public CAs now only issue certs valid for at most 398 days (roughly one year).
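To make the last of these concrete, here is a minimal sketch of checking a certificate’s lifetime against the 398-day cap. It assumes a certificate dict in the shape returned by Python’s `ssl.SSLSocket.getpeercert()`; the function names and the sample dates are my own, purely for illustration. Note that a certificate minted by a middlebox’s private CA is not bound by this limit, which is one more thing the client can no longer rely on.

```python
import ssl

MAX_VALIDITY_DAYS = 398  # cap applied to publicly trusted certificates


def validity_days(cert: dict) -> float:
    """Lifetime of a certificate in days, from its notBefore/notAfter fields."""
    start = ssl.cert_time_to_seconds(cert["notBefore"])
    end = ssl.cert_time_to_seconds(cert["notAfter"])
    return (end - start) / 86400


def within_public_limit(cert: dict) -> bool:
    """True if the lifetime fits within the 398-day cap."""
    return validity_days(cert) <= MAX_VALIDITY_DAYS


# Illustrative values only, not taken from a real certificate.
one_year = {"notBefore": "Jan  1 00:00:00 2024 GMT",
            "notAfter": "Jan  1 00:00:00 2025 GMT"}
two_years = {"notBefore": "Jan  1 00:00:00 2024 GMT",
             "notAfter": "Jan  1 00:00:00 2026 GMT"}
```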

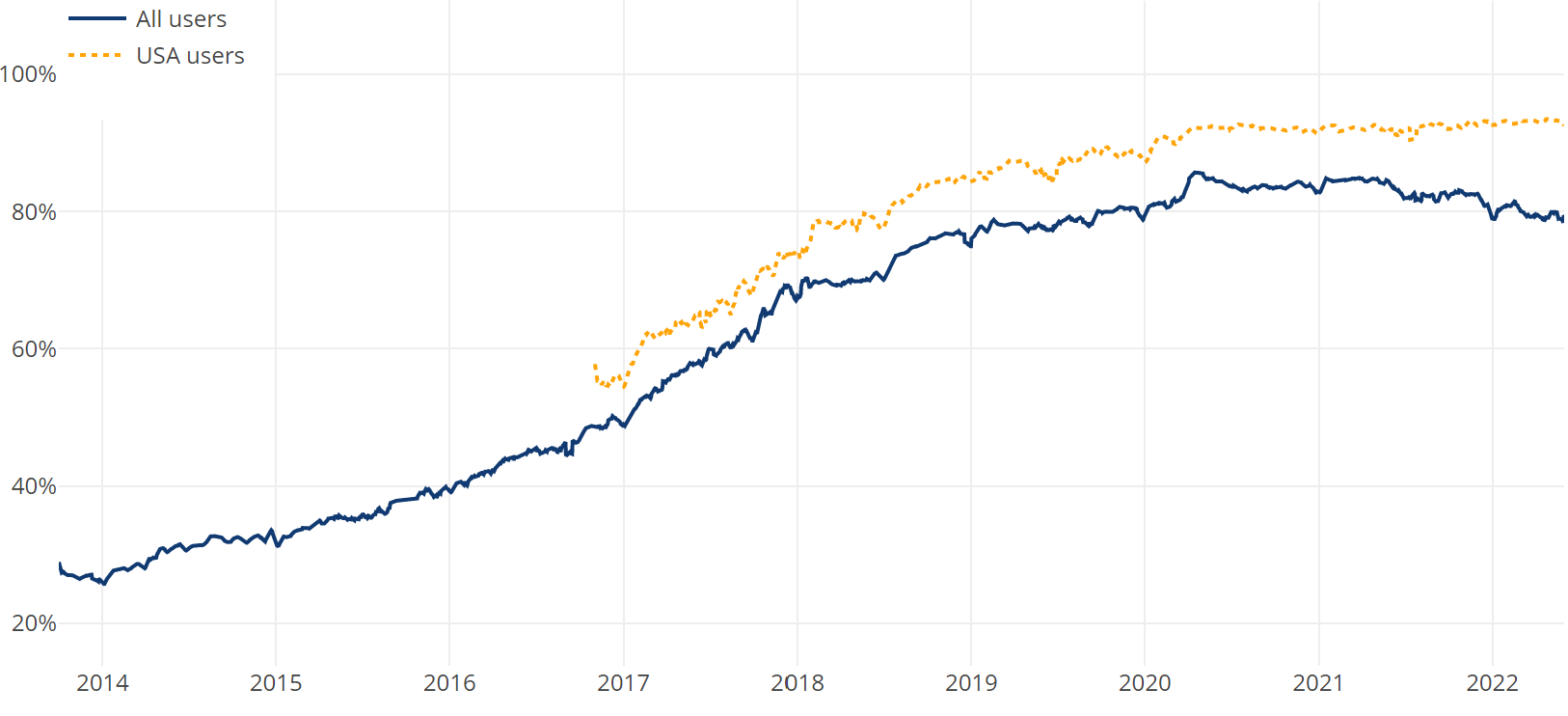

These changes have driven a seismic shift to encrypted connections, as can be seen in the graph below (from LetsEncrypt):

More traffic is encrypted and more encryption cannot be broken without also breaking the apps and services that rely on it.

We now live with massive and ever-expanding manually configured whitelists of IPs and domains that we need our inspection to not-mess-with in order not to break things.

The infosec luminary @SwiftOnSecurity minced no words to this effect in a recent post, to which my reply garnered some interest:

I made the point that as many of our apps and services are cloud-based and hosted in AWS or Azure on shared infrastructure, our whitelists are no longer specific to that one app. The more broadly we whitelist, the lower the visibility of traffic and the higher the likelihood that students (or malware) will leverage an accidentally-permitted destination to work around restrictions. Middlebox vendors claim that their profiling or ‘fingerprinting’ technologies (matching traffic to an app or service based on known patterns) ensure whitelisting is accurate, but regardless of whether we believe that, there remains a greater problem.
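The over-matching problem is easy to demonstrate. The sketch below assumes a whitelist expressed as simple wildcard patterns, which is a simplification of how real middleboxes are configured; the function name and both hostnames are hypothetical.

```python
from fnmatch import fnmatch
from typing import Iterable


def bypasses_inspection(hostname: str, whitelist: Iterable[str]) -> bool:
    """True if the hostname matches any wildcard pattern in the whitelist."""
    host = hostname.lower().rstrip(".")
    return any(fnmatch(host, pattern.lower()) for pattern in whitelist)


# Whitelisting a shared hosting suffix to keep one app working...
WHITELIST = ["*.azurewebsites.net"]

# ...exempts the app we intended (hypothetical name)
intended = bypasses_inspection("school-lms.azurewebsites.net", WHITELIST)

# ...and equally anything else hosted under the same suffix (hypothetical name)
unintended = bypasses_inspection("free-proxy.azurewebsites.net", WHITELIST)
```

Both calls return `True`: the pattern cannot tell the learning platform from a proxy that happens to live on the same shared infrastructure.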

Middleboxes are broken (still)

A few years back, some researchers out of Berkeley and Stanford wrote a paper that never gained the publicity it should have. Entitled ‘The Security Impact of HTTPS Interception’, it summarised their detailed look at a popular array of middleboxes, and their findings were nothing short of ghastly.

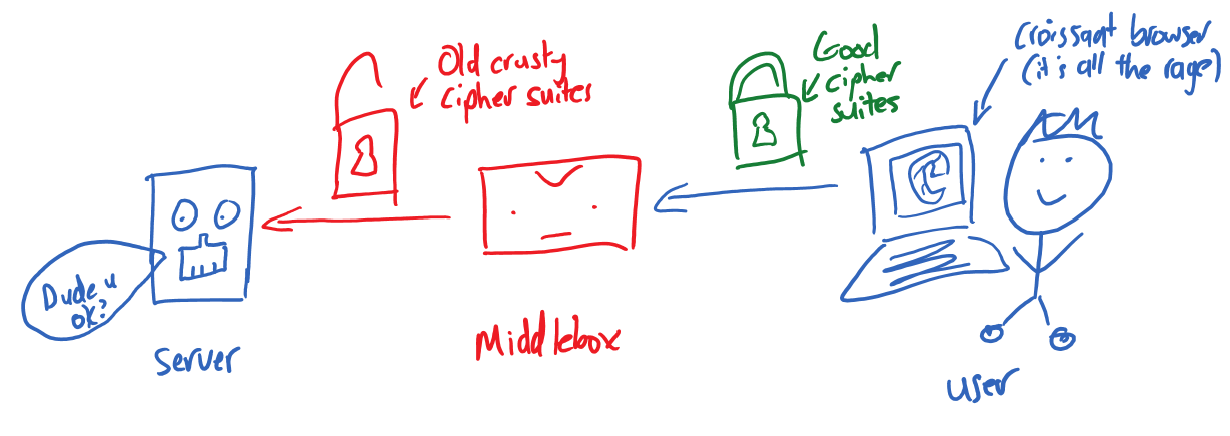

Internet security protocols are constantly evolving as flaws are discovered and mitigated. At certain points in time the industry agrees to drop older standards (cipher suites) so as not to leave unwary users exposed to known vulnerabilities. Each time a client connects to a server, they negotiate a cipher suite they both support, with the intent that even older clients that don’t support the latest standards can connect. Unfortunately breaking the HTTPS connection means that the client only ever performs that negotiation with the middlebox and never sees the remote server. I’ve drawn a handy diagram of this problem:

Since it’s the middlebox that negotiates a cipher suite with the destination server, it needs to be certain it can find a match. If it fails to negotiate, there’s no way for it to explain that to the client as it is on an entirely separately-negotiated connection. Regardless, middlebox vendors don’t want to be known for breaking connections, so they often use the widest possible set of cipher suites, in many cases including those that have been deprecated and have known security vulnerabilities. This exposes clients to security downgrade attacks where a malicious agent reduces the security of the connection to enable them to take advantage of those vulnerabilities to interrupt, intercept or modify the traffic.
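A toy model of the two separate negotiations shows why the client is none the wiser. This is a sketch under simplifying assumptions: it models only suite selection by server preference, not a real handshake or an active downgrade attack, and it mixes TLS 1.2 and 1.3 suite names in one list for brevity. The function names and the choice of which suites each party supports are mine.

```python
from typing import Optional, Sequence, Tuple

MODERN = ["TLS_AES_128_GCM_SHA256", "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256"]
LEGACY = ["TLS_RSA_WITH_3DES_EDE_CBC_SHA", "TLS_RSA_WITH_RC4_128_SHA"]


def negotiate(offered: Sequence[str], server_preference: Sequence[str]) -> Optional[str]:
    """Pick the server's most-preferred suite that the client also offered."""
    offered_set = set(offered)
    for suite in server_preference:
        if suite in offered_set:
            return suite
    return None  # no overlap: the handshake fails


def via_middlebox(browser_offer: Sequence[str],
                  middlebox_accepts: Sequence[str],
                  middlebox_offer: Sequence[str],
                  server_preference: Sequence[str]) -> Tuple[Optional[str], Optional[str]]:
    """Return (suite on the browser leg, suite on the server leg)."""
    client_leg = negotiate(browser_offer, middlebox_accepts)
    server_leg = negotiate(middlebox_offer, server_preference)
    return client_leg, server_leg


# A modern browser talking directly to an outdated server: no common suite.
direct = negotiate(MODERN, LEGACY)

# The same browser behind a middlebox that offers everything upstream.
proxied = via_middlebox(MODERN, MODERN, MODERN + LEGACY, LEGACY)
```

Here `direct` is `None`, so the browser would refuse the connection, while `proxied` is `("TLS_AES_128_GCM_SHA256", "TLS_RSA_WITH_3DES_EDE_CBC_SHA")`: the browser sees a strong suite on its own leg while the traffic crosses the Internet under a deprecated one.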

If you are concerned that your middlebox is insecure, check the Qualys Browser Test page from behind it; remember that this is not testing your browser since it’s not making the connection directly.

Another problem that results from removing the direct connection between client and server is that the client never sees the real server certificate, so it can’t perform certificate revocation checks on it, nor process HPKP. The authors hit the nail on the head when they say:

“If we expect browsers to perform this additional verification, proxies need a mechanism to pass connection details (i.e., server certificate and cryptographic parameters) to the browser. If we expect proxies to perform this validation, we need to standardize these validation steps in TLS and implement them in popular libraries.”

Essentially the middlebox vendor doesn’t want their system to be doing anything it doesn’t have to; it’s processing massive amounts of traffic and being benchmarked against the competition on the overhead it imposes. Adding validation checks to every connection will slow things down and they don’t want that. To fix this we must either create a side-channel between the middlebox and the client for relaying telemetry or, preferably, build validation into the TLS protocol itself so that the middlebox cannot avoid doing it. Unfortunately, in the years since this paper was published, neither of these has happened to my knowledge.

RIP SSL inspection 🪦

I’d say that all of this points to the death of application-layer network traffic inspection as the sole point of control over client devices. We certainly still need it for the 60% of traffic we haven’t whitelisted but the remaining load must now be shunted either lower (down into DNS) or higher (onto the client device itself). The problem is, there aren’t many solutions that encompass this diverse set of systems; we end up dealing with separate logs and policies, piecing things together when there’s a problem. When I’ve found a good answer I’ll be sure to write it up!