I’ve put this post together as an update for a number of schools who have asked me how we’re going on our Private Preview of MCC. I’ll start with a bit of background though, for anyone new to this.

Info

30/10/24: I never thought it would take this long but Microsoft have finally today released MCC into public preview with new telemetry reports and a Windows node deployment option (via WSL). The technical specifics in this post are no longer aligned to the latest version so don’t look for guidance here; if I publish an update in future I’ll do so in a separate post.

You had me at DOINC

The best product acronym Microsoft ever devised was DOINC, for the Delivery Optimisation In-Network Cache. This was a service that would store local copies of Windows and Office apps and updates so that client devices could access a fast, consistently-connected local copy rather than downloading from peers or over the Internet. That’s particularly important in an education context where bandwidth and battery life are at a premium and where we routinely set up hundreds of devices in the space of a few days.

At its release, DOINC had more in common with Apple’s Content Cache than Microsoft’s existing WSUS (Windows Server Update Services) with its minimal configuration and low management overhead. The kicker was that it required Config Manager, the large-footprint MDM server used by organisations with on-prem and hybrid infrastructure. Cloud-first orgs like mine using Intune for MDM were left hoping for a standalone version. This was announced to great fanfare at Ignite in 2019, named Microsoft Connected Cache.

I jumped on this, signed up for information and pestered everyone I knew at Microsoft frequently about it, all to no avail. Eventually after two years of waiting, MCC was made generally available – for Config Manager 😨

There was some wailing and gnashing of teeth from my end of the room and more declarations that Microsoft had again forgotten about their cloud-first customers (that seems to be a running thread through these posts) but to their credit they got in touch shortly afterwards and directed me to the private preview of the standalone version.

Preview – The Results

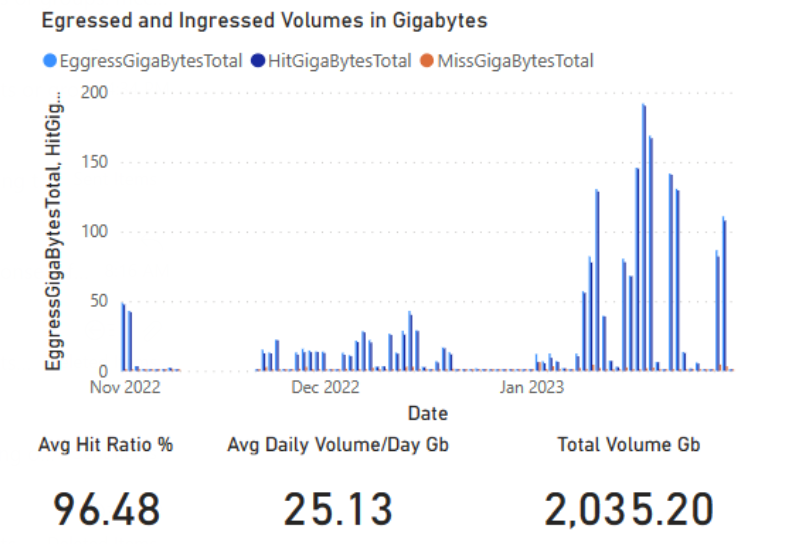

I installed our server in November, targeting our peak period of device rollout in January for the start of the Australian academic year. The stats above are encouraging, though I am presenting the ideal scenario of many similar devices all requesting similar content within a short space of time (you can tell it’s a preview since ‘egress’ isn’t spelled correctly on the graph!).

The cache was serving almost 200GB of daily traffic at peak and over 96% was from cache on average. We deployed several hundred devices using Autopilot through OOBE up to the login screen. The bulk of this cached data was Intune apps and minor updates as we were already deploying from an image built with the Surface Deployment Accelerator using the 22H2 update of Windows 11.
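
To put those figures in perspective, here is a back-of-the-envelope split of the peak day. It's only a sketch using rounded numbers (200GB and a 96% hit rate, where the real values were "almost" and "over" respectively), and the function name is my own rather than anything from MCC.

```python
def split_traffic(total_gb: float, hit_ratio: float) -> tuple[float, float]:
    """Split traffic served to clients into (from_cache, from_origin), in GB."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    from_cache = total_gb * hit_ratio
    return from_cache, total_gb - from_cache


# Rounded figures for the peak day: ~200GB served, ~96% of it from cache.
from_cache, from_origin = split_traffic(200, 0.96)
print(f"Served locally: {from_cache:.0f}GB, fetched over the Internet: {from_origin:.0f}GB")
```

On those rounded numbers, roughly 192GB a day stayed on the local network and only about 8GB had to cross the Internet link.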

Preview – Setup Process

You don’t need to be in the preview to see the documentation - it’s available on Microsoft Docs or at aka.ms/MCC-Enterprise-Program-Information. It currently comes with a warning that it’s a preview feature and may change in future.

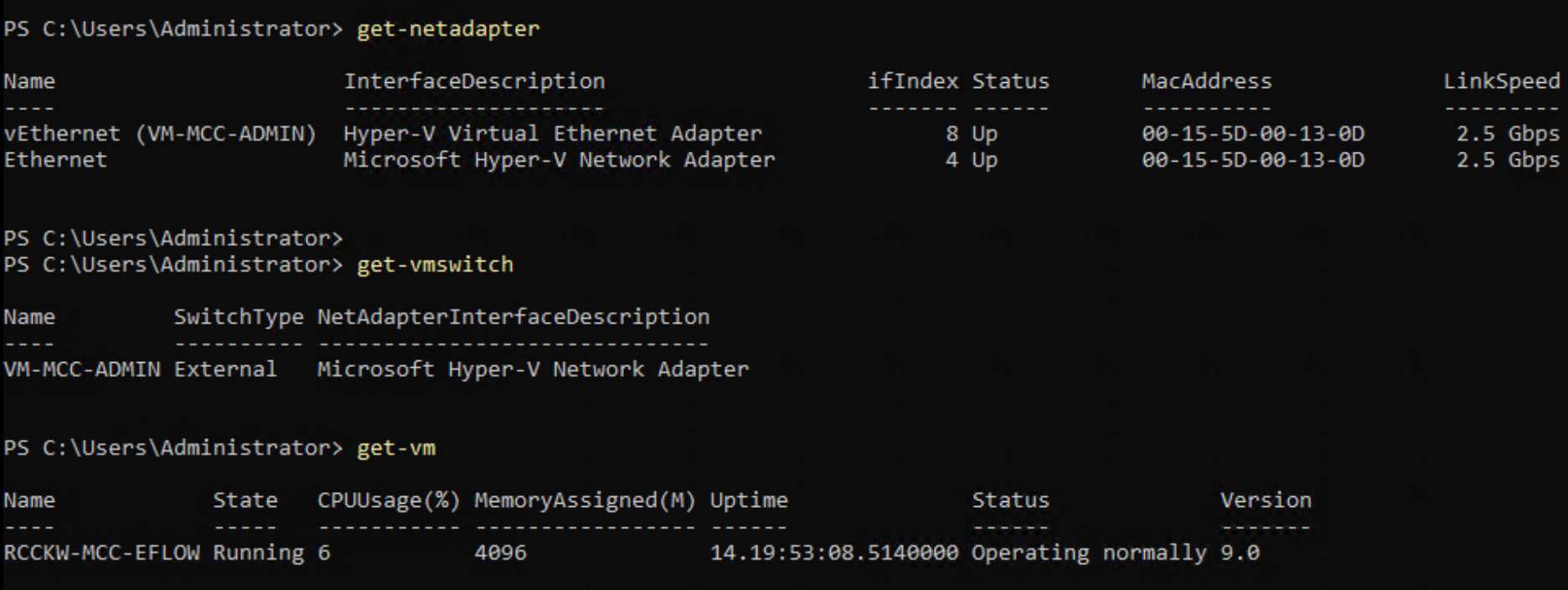

There are Azure and on-prem components involved and while standing them up isn’t difficult, it could certainly be streamlined. On the Azure side we start by setting up the instance of connected cache (only visible after enrolling in the preview) and creating a node which will correspond to the on-prem server. This generates an install script for the on-prem side which deploys a VM called EFLOW running Linux with Azure bits for containers (the snappily-named Azure IoT Edge for Linux on Windows). The on-prem connected cache service itself runs in a container inside that VM.

In my case I opted to run the script in a VM, since this keeps everything within that VM including the Hyper-V settings and virtual switch. I figured that way I can more easily blow it away and start from scratch (this is a preview after all). To do that, I enabled nested virtualisation on the host and everything else proceeded as per the docs.

So yeah, it’s a service in a container in a Linux VM running on a Windows VM on a Windows server; slightly convoluted but resulting in a clean setup.

Despite all the moving parts, the only caveat that tripped me up is that the service is currently only available in US datacentres; the process will fail if any others are selected.

Worth the wait?

It’s been a long time coming but so far I’m impressed. It’s not just the obvious bandwidth savings but the deployment scenarios that become possible when it only takes moments to bring a device up to date. Consider also the circumstances where we would prefer to enable client segmentation on a network for security reasons, but doing so would prevent delivery optimisation from using peering for its downloads and thus impose a massive bandwidth penalty; this fixes that.

The biggest problem at the minute is that the server is a black box; it’s not possible to tell what it’s caching, how busy it is, or even whether it’s up without running a series of commands on the VM.

I have a scheduled task to open a test object on the server on an hourly basis and sound an alarm if it fails. This URL will return the Windows 2000 logo (someone over at Redmond is having a laugh I think!):

http://<YourCacheServerIP>/mscomtest/wuidt.gif?cacheHostOrigin=au.download.windowsupdate.com
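
For anyone wanting to do something similar, here is a minimal sketch of that check in Python. It is not the exact task I run: the function names, the timeout and the idea of verifying the GIF signature (rather than just the HTTP status) are all assumptions for illustration, and the fetcher is injectable so the logic can be tested without a real cache server.

```python
import http.client
import urllib.request
from urllib.parse import urlencode


def build_test_url(cache_host: str, origin: str = "au.download.windowsupdate.com") -> str:
    """Build the test-object URL for a given cache server address."""
    query = urlencode({"cacheHostOrigin": origin})
    return f"http://{cache_host}/mscomtest/wuidt.gif?{query}"


def looks_like_gif(body: bytes) -> bool:
    """GIF files begin with the signature GIF87a or GIF89a."""
    return body[:6] in (b"GIF87a", b"GIF89a")


def http_get(url: str, timeout: float = 10.0) -> bytes:
    """Fetch a URL and return its body; raises on network or HTTP errors."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def cache_is_healthy(cache_host: str, fetch=http_get) -> bool:
    """Return True if the cache serves something that looks like the test GIF."""
    try:
        return looks_like_gif(fetch(build_test_url(cache_host)))
    except (OSError, http.client.HTTPException):
        # Covers timeouts, refused connections and HTTP error statuses.
        return False
```

A scheduled task could call `cache_is_healthy("<YourCacheServerIP>")` once an hour and raise whatever alarm suits you when it returns `False`.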

The MCC preview team at Microsoft have been very helpful and are keen on hearing feedback. They do have access to a basic stats dashboard and can pull them on request; that’s how I got the image of my stats above. A dashboard will reportedly soon be added to the preview and I’m hoping it will show this plus other useful areas such as:

- A bar graph showing overall throughput and average transfer rates.

- A bar graph showing different types of update and how much storage they account for (e.g. Windows feature, Windows security, Surface drivers, Intune apps, etc.).

- A list of most requested updates showing their vital stats (name, size, etc).

- A pie chart of storage volume by cache hits (if storage is maxed out by frequently requested objects then it’s probably time to increase it).

Just do it!

I used to run an Apple network and was a fan of their Content Cache (running on my 2006 Xeon Xserves - the halcyon days). It had a very simple process which as far as I know is still the way they do it today:

- Cache server starts up, contacts Apple Update Service and registers itself.

- Device checks for update and contacts Update Service to download it.

- Update Service checks the public IP address of the requesting client and compares with existing cache registrations. If it finds a match, it sends the client a redirection to the internal IP address of the cache server.

- Client contacts cache server directly and downloads update.
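
The steps above can be modelled in a few lines. This is a toy illustration of the idea as I understand it, not Apple's actual protocol: the class, method names, hostname and IP addresses are all made up for the example.

```python
class UpdateService:
    """Toy stand-in for an update service that redirects clients to a local cache."""

    def __init__(self, origin_host: str):
        self.origin_host = origin_host
        self._caches: dict[str, str] = {}  # public IP -> cache server's internal IP

    def register_cache(self, public_ip: str, internal_ip: str) -> None:
        """Step 1: a cache server starts up and registers itself."""
        self._caches[public_ip] = internal_ip

    def locate(self, client_public_ip: str) -> str:
        """Step 3: match the client's public IP against registered caches.

        Returns the cache's internal IP if one shares the client's public IP,
        otherwise falls back to the origin host.
        """
        return self._caches.get(client_public_ip, self.origin_host)


service = UpdateService(origin_host="updates.example.com")
service.register_cache(public_ip="203.0.113.7", internal_ip="10.1.0.20")

print(service.locate("203.0.113.7"))   # client behind the same public IP as the cache
print(service.locate("198.51.100.9"))  # client on some other network
```

The first lookup returns the cache's internal address and the second falls back to the origin, and at no point did the client need to be told anything in advance.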

I remember discovering this for the first time and thinking what an utterly genius solution it was – caching with zero client configuration – and wondered when Microsoft would catch on and do the same.

Honestly, I’m still wondering. It’s not that MCC doesn’t also have a genius solution; it does! You can add the cache server’s IP to a DHCP option so every device gets it at the front door and can find it with zero configuration, and support is built into Windows – but it won’t work without a policy to tell Windows to use it. It’s like the ball was kicked right up to the goal line and then abandoned 🤦🏽‍♂️

Imagine setting up a brand new Windows device that uses the cache server at OOBE to fetch updates before it even gets to checking whether it’s enrolled in Autopilot. Imagine unmanaged and BYOD machines we can’t define policy for using the cache automatically. Imagine that on guest networks – my school hosted a conference once and the biggest bandwidth drain was Windows updates!

I’m not despairing yet though. There’s another strand to the MCC preview; service providers. Given that an ISP can’t change settings on their customers’ devices, it simply must be zero-configuration:

Microsoft end-user devices (clients) periodically connect with Microsoft Delivery Optimization Services, and the services match the IP address of the client with the IP address of the corresponding MCC node.

Microsoft clients make the range requests for content from the MCC node.

I may not be reading that right but it sure sounds like Microsoft is matching clients to cache servers automatically. So here’s the question: if it can be automatic for some things then why not for everything?